Efficiency is one of Kubernetes' top benefits, yet companies adopting it often face high infrastructure costs and performance issues, with applications failing to meet their latency SLOs. Even for experienced performance engineers and SREs, sizing resource requests and limits to meet application SLOs can be a real challenge because of the complexity of Kubernetes resource management mechanisms.

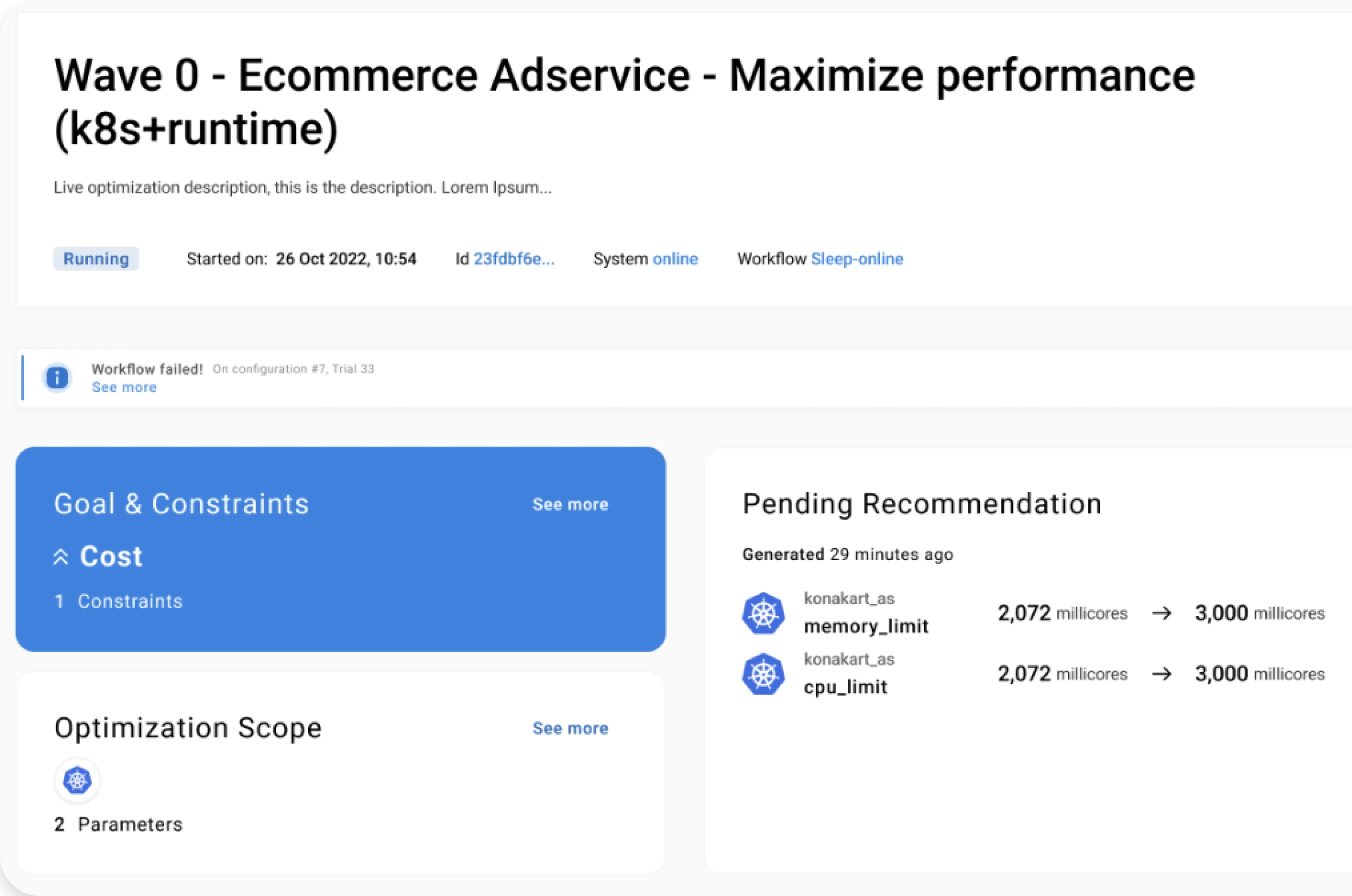

In this talk at the Tricentis Performance Advisory Council (PAC) 2021 on October 7, Stefano Doni (CTO at Akamas) describes how AI techniques can help optimize Kubernetes to meet SLOs.

The first part of the presentation covers Kubernetes resource management concepts from a performance and reliability perspective. In the second part, these AI techniques are applied to a real-world cloud-native application to strike the right balance between low cost and application performance and reliability.